Kubernetes Scaling Demystified: A Deep Dive into Different Approaches on Google Kubernetes Engine (GKE)

Kubernetes, the industry-leading container orchestration platform, excels at managing dynamic workloads. Efficient scaling keeps resource utilization, performance, and cost in balance, especially within the Google Cloud Platform (GCP) ecosystem. This blog post gives an overview of Kubernetes scaling strategies, using architecture diagrams for visual clarity and offering technical insights and best practices from our Cloud Solutions and Practice team at Tech Mahindra. We'll focus on how these strategies are implemented and optimized within Google Kubernetes Engine (GKE).

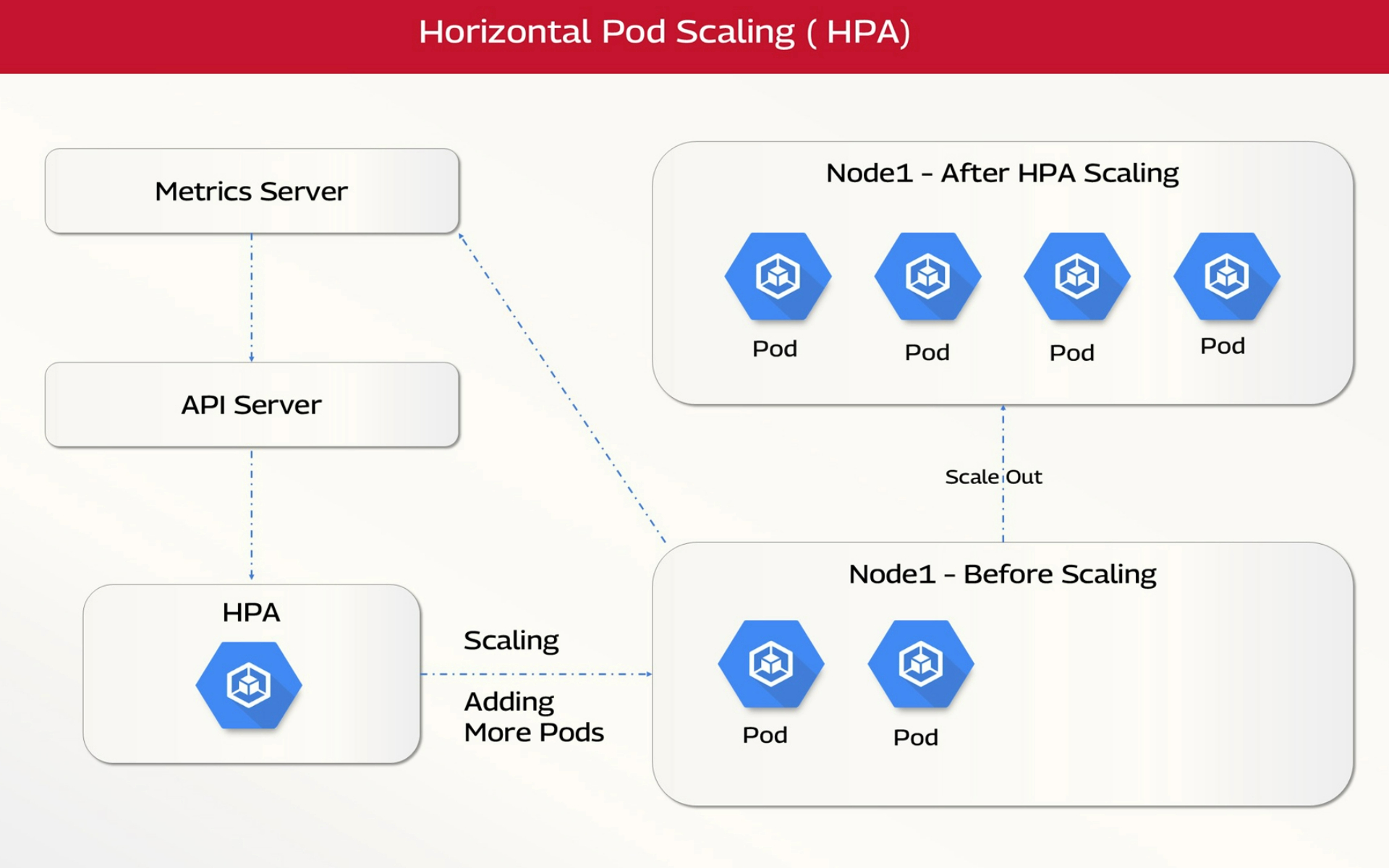

1. Horizontal Pod Autoscaler (HPA) on GKE

- Concept: HPA automatically adjusts the number of pod replicas based on observed CPU utilization, memory usage, or custom metrics. It's the most common scaling mechanism in Kubernetes and works seamlessly with GKE.

Process:

- The metrics server (often deployed as a standard component in GKE) continuously collects resource usage data from running pods.

- The HPA controller compares these metrics against predefined target thresholds (e.g., average CPU utilization of 80%).

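- Based on the comparison, the HPA controller updates the desired replica count of the target workload (for example, a Deployment) through the Kubernetes API server, which scales the number of pods up or down. On GKE the HPA controller runs as part of the managed control plane, so no extra installation is needed.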

- GKE Considerations: When using HPA on GKE, pay attention to the performance and scalability of the metrics pipeline that feeds horizontal pod autoscaling, especially in large clusters. You can also integrate HPA with Cloud Monitoring for richer metrics and alerts.

Example: A web application running on GKE that experiences increased traffic will see higher CPU utilization. HPA detects this and automatically increases the number of pods to handle the load. As traffic subsides, HPA scales the deployment back down.
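The comparison step described above follows a ratio rule documented for the Kubernetes HPA: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below illustrates only that arithmetic. The function name and the `min_replicas`/`max_replicas` defaults are illustrative assumptions, and the real controller also accounts for missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,    # assumed bound, set per workload
                     max_replicas: int = 10,   # assumed bound, set per workload
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA ratio rule (simplified)."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go outside the configured replica bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For instance, with 4 replicas averaging 95% CPU against an 80% target, the ratio is about 1.19, so the sketch suggests 5 replicas.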

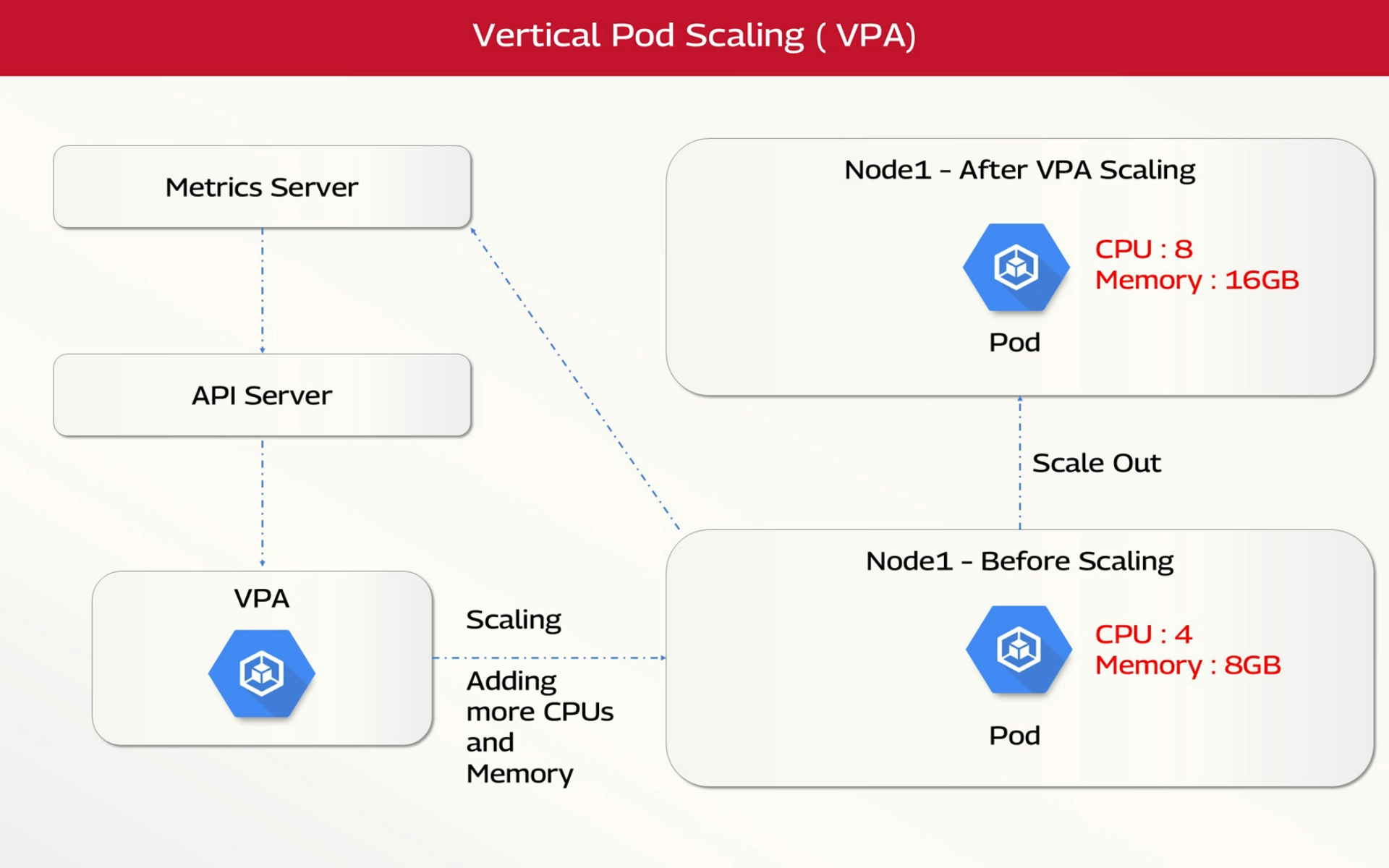

2. Vertical Pod Autoscaler (VPA) on GKE

- Concept: VPA automatically adjusts individual pods' resource requests and limits (CPU and memory) based on their historical and current usage. This optimizes resource allocation and prevents starvation or waste within your GKE cluster.

Process:

- The metrics server collects resource usage data.

- The VPA controller analyzes this data and recommends optimal resource requests and limits for each pod.

- The VPA then applies the recommendation through the Kubernetes API server. Because the resource requests of a running pod normally cannot be changed in place, this usually means the pod is evicted and recreated with the new resource allocations.

- GKE Considerations: VPA can be beneficial in GKE for optimizing resource usage and cost efficiency, especially for workloads with varying resource demands. Ensure proper configuration to avoid unnecessary pod restarts.

Example: If a pod on a GKE node consistently uses more memory than its request but less than its limit, VPA might raise the memory request to a more appropriate value, so the pod has sufficient resources without being over-provisioned.
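To make the idea of a usage-based recommendation concrete, here is a minimal Python sketch. It is not VPA's actual recommender: it simply takes a high percentile of observed memory usage and adds a safety margin. The function name, the 90th percentile, and the 15% margin are all illustrative assumptions.

```python
import math


def recommend_request(usage_samples_mib: list[float],
                      percentile: float = 0.9,       # assumed percentile
                      safety_margin: float = 0.15) -> int:  # assumed margin
    """Suggest a memory request in MiB from observed usage samples."""
    if not usage_samples_mib:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples_mib)
    # Nearest-rank percentile: the smallest sample that covers
    # `percentile` of all observations.
    rank = max(1, math.ceil(percentile * len(ordered)))
    base = ordered[rank - 1]
    # Round up so the request is never below the padded usage.
    return math.ceil(base * (1 + safety_margin))
```

A recommendation like this only takes effect when the pod is recreated, which is why the update behavior deserves as much attention as the numbers themselves.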

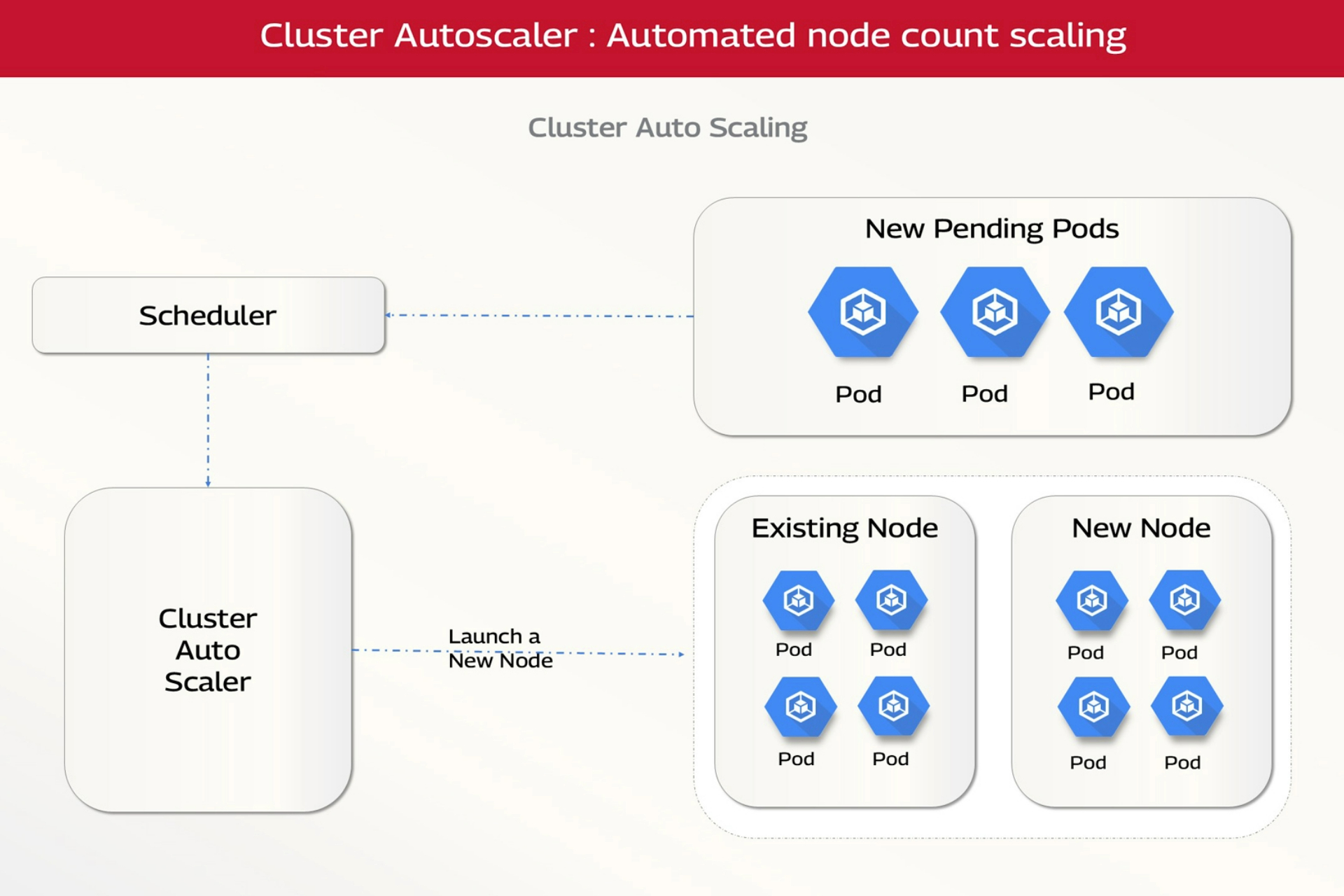

3. Cluster Autoscaler on GKE

- Concept: The Cluster Autoscaler adjusts the number of worker nodes in your GKE cluster. It adds nodes when pods are pending due to insufficient resources and removes nodes when they are underutilized. This is crucial for scaling the entire cluster's capacity.

Process:

- The cluster autoscaler monitors resource requests of pending pods and the utilization of existing nodes.

- If pending pods cannot be scheduled due to resource constraints, the cluster autoscaler interacts directly with the GKE API to provision new Google Compute Engine (GCE) instances as nodes.

- Conversely, if nodes are consistently underutilized and their pods can be rescheduled onto other nodes, the cluster autoscaler removes them to save costs.

- GKE Considerations: The cluster autoscaler is a key component of GKE's autoscaling capabilities. Configure it to work with your desired node pools, instance types, and scaling limits. Consider using node pool autoscaling for fine-grained control.

Example: During a large batch processing job on GKE, many pods might become pending. The cluster autoscaler detects this and adds new GCE nodes to accommodate the workload. Once the job is complete and nodes become underutilized, the cluster autoscaler scales the cluster back down.
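The scale-up decision can be pictured as a bin-packing estimate: how many new nodes would it take to place the pending pods? The Python sketch below is a deliberately simplified illustration that looks at CPU requests only and uses a first-fit-decreasing heuristic. The real cluster autoscaler simulates the scheduler and considers memory, affinity rules, and node pool limits, so treat the function name and its parameters as hypothetical.

```python
def nodes_to_add(pending_cpu_millicores: list[int],
                 node_allocatable_millicores: int,
                 current_nodes: int,
                 max_nodes: int) -> int:
    """Estimate how many new nodes are needed for the pending pods."""
    free_per_new_node: list[int] = []
    # Place the largest requests first (first-fit decreasing).
    for request in sorted(pending_cpu_millicores, reverse=True):
        if request > node_allocatable_millicores:
            continue  # this pod can never fit on this node type
        for i, free in enumerate(free_per_new_node):
            if request <= free:
                free_per_new_node[i] = free - request
                break
        else:
            # No simulated node has room, so open a new one.
            free_per_new_node.append(node_allocatable_millicores - request)
    # Respect the configured upper bound on cluster size.
    headroom = max(0, max_nodes - current_nodes)
    return min(len(free_per_new_node), headroom)
```

For four pending pods requesting 500, 1500, 1000, and 2000 millicores on nodes with 3000 allocatable millicores, the sketch packs them onto two new nodes, provided the maximum node count allows it.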

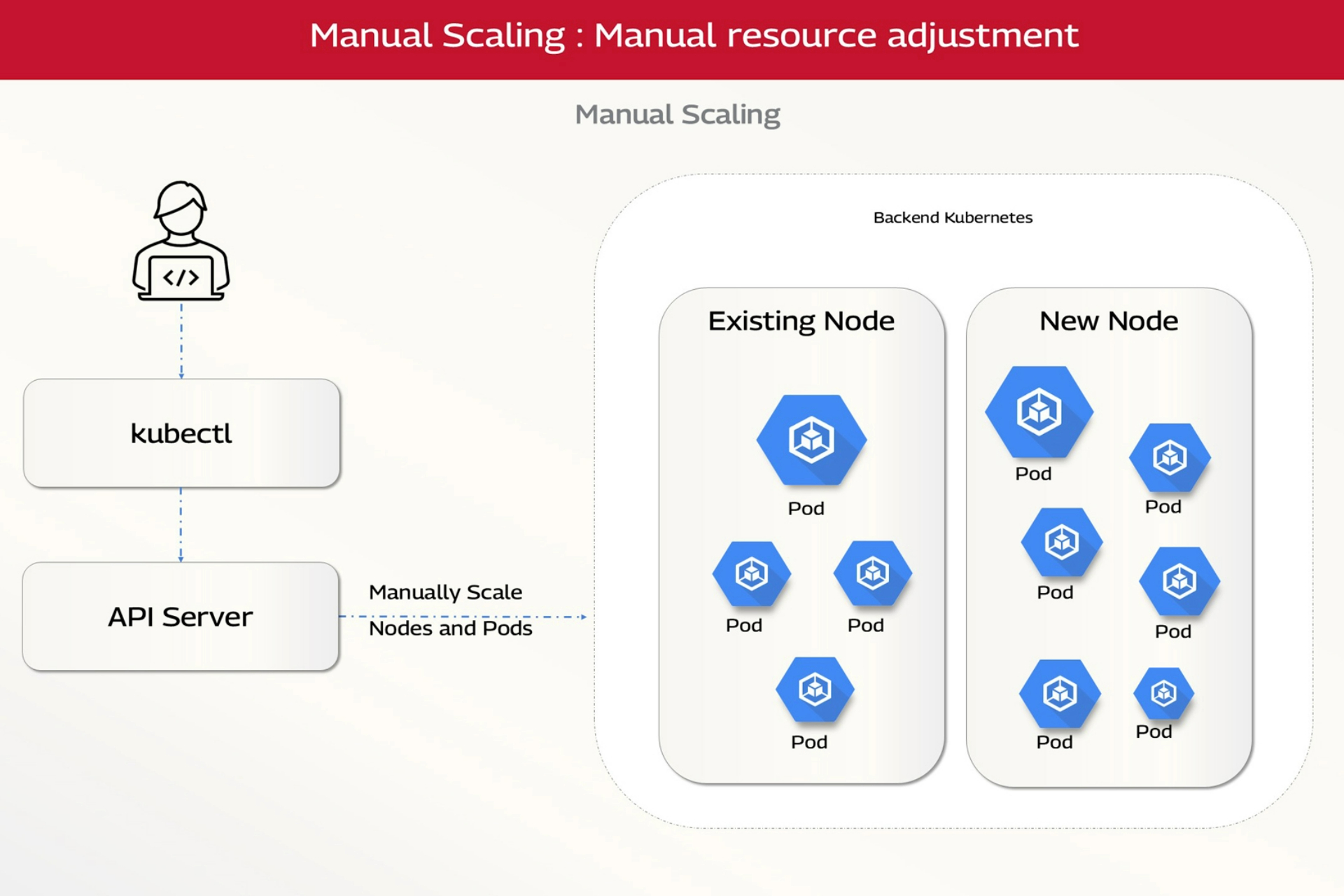

4. Manual Scaling on GKE

- Concept: While autoscaling is preferred, manual scaling lets you directly control the number of pods or nodes using kubectl commands or the Google Cloud Console.

Process: You execute commands like `kubectl scale deployment <deployment-name> --replicas=<count>` to change the number of pods, or use the Google Cloud Console to adjust node counts in your GKE cluster.

- GKE Considerations: Manual scaling can be helpful for specific testing scenarios or one-time adjustments. However, autoscaling is generally recommended for production workloads on GKE.

Example: For a predictable, one-time event such as a planned load test within your GKE environment, manually raising the replica count beforehand and lowering it afterwards is a simple and effective approach.
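If manual scaling is part of a runbook, it can help to build the `kubectl scale` invocation in a small script rather than typing it by hand. The Python sketch below only assembles the argument list; the helper name and the deployment name used in the usage note are hypothetical.

```python
def kubectl_scale_args(deployment: str,
                       replicas: int,
                       namespace: str = "default") -> list[str]:
    """Build the argument list for a `kubectl scale deployment` call."""
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    return [
        "kubectl", "scale", "deployment", deployment,
        f"--replicas={replicas}",
        f"--namespace={namespace}",
    ]
```

Assuming `kubectl` is installed and pointed at the right cluster, the list can be passed to `subprocess.run(kubectl_scale_args("web-frontend", 5), check=True)`, where `web-frontend` is a placeholder deployment name.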

5. Predictive Scaling (and Custom Metrics) on GKE

- Concept: Predictive scaling leverages historical data and machine learning to anticipate future resource needs and proactively scale your application running on GKE. This is often combined with custom metrics.

Process:

- A monitoring system (like Cloud Monitoring) collects historical data on resource usage, application metrics (e.g., requests per second, queue length), and other relevant factors.

- A machine learning model is trained on this data to predict future resource requirements.

- An autoscaler (like HPA, but potentially more sophisticated) uses these predictions to scale the application proactively, before the load arrives. Tools like KEDA (Kubernetes Event-driven Autoscaling) can scale workloads based on custom metrics and event sources, and they integrate well with GKE.

- GKE Considerations: Use Cloud Monitoring and other GCP services to collect and analyze metrics. KEDA can be deployed on GKE to enable advanced autoscaling scenarios.

Example: An e-commerce platform running on GKE might use predictive scaling to anticipate increased traffic during a sales event. By analyzing historical sales data in BigQuery, the system can scale up the application before the event begins, ensuring a smooth user experience.
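The predict-then-scale loop can be sketched in a few lines of Python. As a stand-in for a trained machine learning model, the example uses a seasonal-naive forecast: the average requests per second observed at the same hour on previous days. The function names, the 20% headroom, and the per-pod capacity are illustrative assumptions, not part of any GKE or KEDA API.

```python
import math


def forecast_rps(history: list[list[float]], hour: int) -> float:
    """Average the requests/second seen at `hour` across past days."""
    values = [day[hour] for day in history]
    return sum(values) / len(values)


def replicas_for(predicted_rps: float,
                 rps_per_pod: float,
                 min_replicas: int = 2,        # assumed floor
                 headroom: float = 0.2) -> int:  # assumed safety buffer
    """Convert a traffic forecast into a replica count."""
    needed = math.ceil(predicted_rps * (1 + headroom) / rps_per_pod)
    return max(min_replicas, needed)
```

The resulting number could then be applied ahead of the expected peak, for example by raising a deployment's minimum replica count, while a reactive autoscaler remains in place to handle anything the forecast misses.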

Conclusion

Kubernetes, especially when used through GKE, offers a powerful and diverse toolkit of scaling strategies for a wide range of workload characteristics. By understanding these strategies and selecting the appropriate tools, organizations can optimize resource utilization, maintain application performance, and control costs effectively within the GCP environment. The right combination of scaling mechanisms depends on your specific application needs and operational requirements.

At Tech Mahindra, our Cloud Solutions and Practice team helps organizations navigate these complexities and implement optimal scaling solutions for their Kubernetes deployments on GKE. We can help you leverage the full potential of GKE's autoscaling capabilities to achieve maximum efficiency and performance for your applications.

Krishnaprasad is a Principal Solutions Cloud Architect with 19+ years of experience in cloud infrastructure and modernization, including 7+ years specializing in Google Cloud Platform (GCP). He architects and implements robust GCP solutions, focusing on cloud infrastructure. At Tech Mahindra, he leads practice and competency development, collaborates with OEMs on cutting-edge solutions, and advises clients on GCP and hybrid cloud deployments. His expertise spans practice building, strategic partnerships, and complex deal support, driving value for clients and Tech Mahindra.

Chakravarthy Komaravolu, in his current role, heads the Cloud Solutions for the EMEA region and leads the Infrastructure Modernization Practice for Google Cloud. He has 25+ years of rich IT industry experience in various functional roles, technology platforms, practice, and solution development initiatives. He specializes in providing customized solutions for complex requirements, focusing on business outcomes and leveraging modern technologies. He is a seasoned expert in infrastructure modernization and in jointly building go-to-market solutions with a range of technology partners.
