An Event-Driven Architecture for Linear Broadcast Networks

Linear television broadcasters worldwide are under increasing pressure to move to an automated, event-driven, and data-led operation. Doing so helps them better serve their core stakeholders: audiences (through hyper-personalized content offerings) and advertisers (through highly targeted audience segments in today’s cookie-less world). Current technology trends point to a shift away from legacy on-prem, bespoke-hardware technology stacks and towards the software-defined workflows that make this possible.

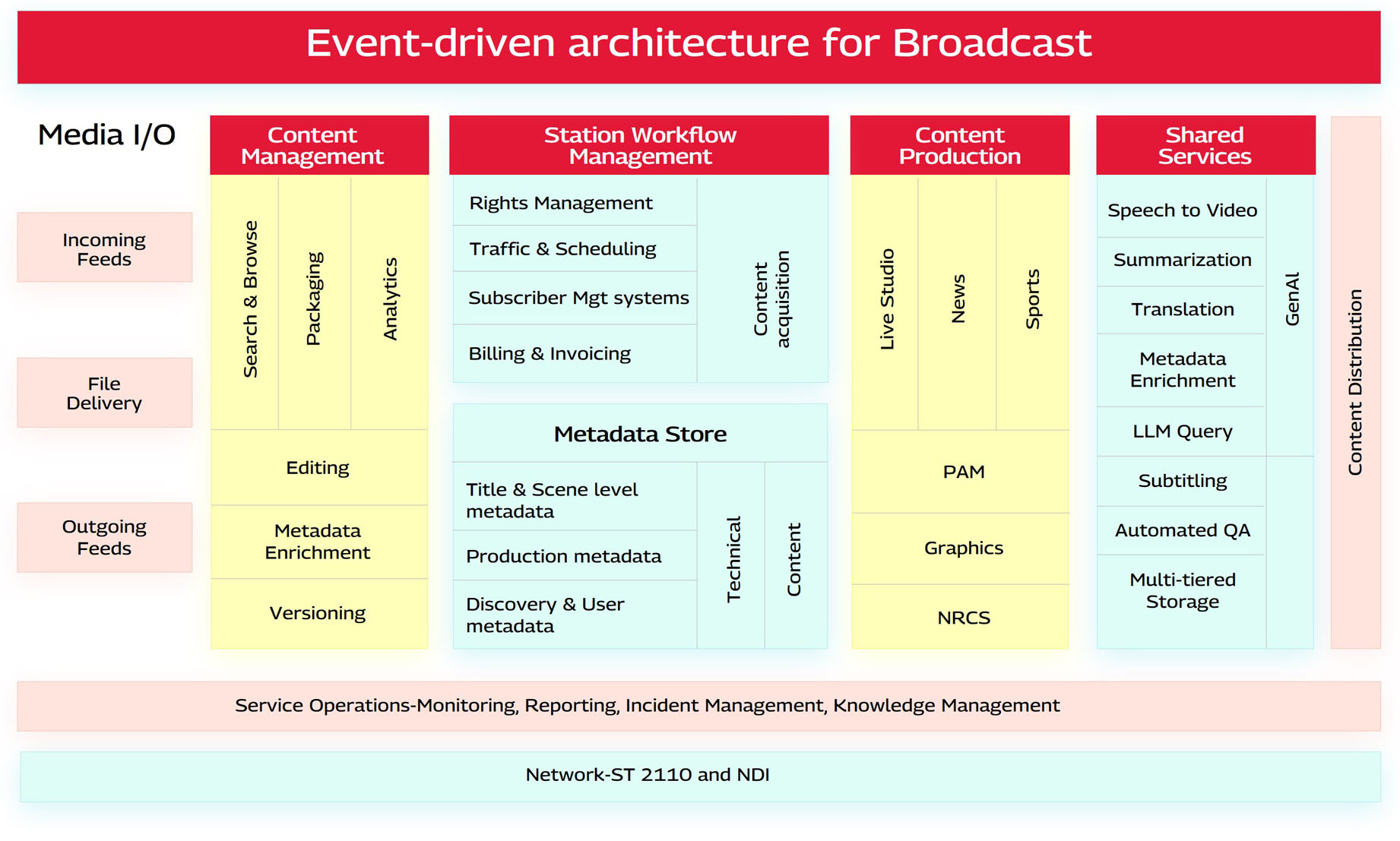

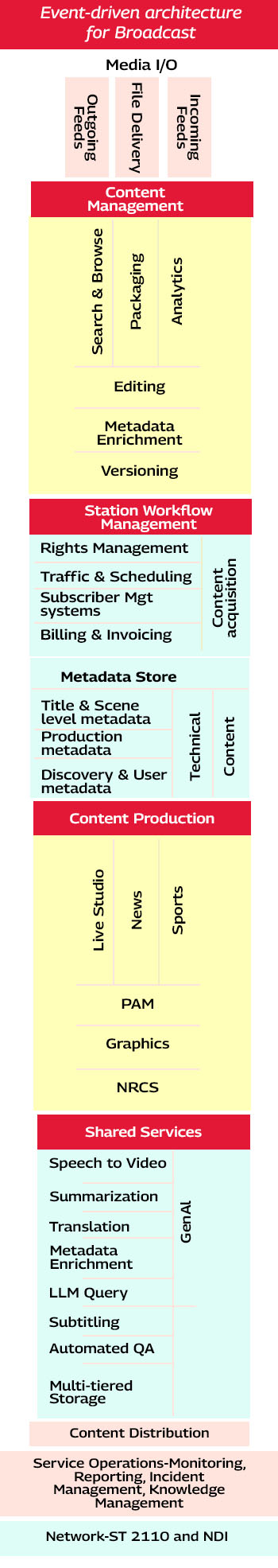

This blog post describes a reference architecture for a modern broadcast station built on a cloud-first, event-driven, microservices-based design. The video/audio network itself eschews traditional HD-SDI baseband signal flows in favor of IP-based ST 2110 and NDI. The architecture shown in the figure is organized into ‘capability blocks’, i.e. collections of core functionality, with data flows between them to enable a flexible and extensible system. The heart of the facility design is the central interplay between the metadata, content management, and business logic capability blocks.

Media I/O: All media contributing to the production process enters and leaves the facility through the media I/O capability block, the leftmost block in the figure above. The media could take the form of video files, live SRT/NDI streams, or even baseband video feeds. This block also handles return feeds sent back to live locations and syndication partners.

Distribution Block: This is where file-based and live-stream distribution of the final ‘on-air’ station content takes place, both to DTH/cable head-ends and to OTT streaming platforms.

Content Management: This capability block is responsible for the complete lifecycle of media assets within the station, from ingestion to editing and archiving. Core media functions such as searching, versioning, editing, and metadata enrichment take place in this block. All ingested and versioned content is also stored here, and hyper-personalized content is packaged here for distribution. The data exchange between this block and the station workflow management block ensures that rules pertaining to content rights management, versioning, and playout schedules are adhered to. Content security, access rights, and compliance are enforced in this block, and integrations with third-party applications are made here as well.
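As a rough illustration of the kind of rule that the exchange with station workflow management might enforce, the sketch below checks whether a proposed playout slot falls inside a licensed rights window. The `RightsWindow` type, its fields, and the `can_schedule` helper are hypothetical names chosen for this example, not part of any specific rights management product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class RightsWindow:
    """Hypothetical licence window for one territory (illustrative fields)."""
    territory: str
    start: datetime
    end: datetime


def can_schedule(windows, territory, slot_start, slot_end):
    """Return True if the whole slot sits inside a window for the territory."""
    return any(
        w.territory == territory and w.start <= slot_start and slot_end <= w.end
        for w in windows
    )


# Example: a title licensed for India during the first quarter of 2025.
windows = [RightsWindow("IN", datetime(2025, 1, 1), datetime(2025, 3, 31))]

inside = can_schedule(windows, "IN", datetime(2025, 2, 1, 20), datetime(2025, 2, 1, 21))
outside = can_schedule(windows, "IN", datetime(2025, 4, 1, 20), datetime(2025, 4, 1, 21))
wrong_territory = can_schedule(windows, "UK", datetime(2025, 2, 1, 20), datetime(2025, 2, 1, 21))
```

In a real station this check would draw on rights data held by the workflow management block rather than on an in-memory list.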

Metadata Store: The core of the station resides here, with all metadata about content, schedules, and workflows stored in this block. This includes exhaustive technical and descriptive metadata at both title and scene level. The store is designed as a data lakehouse that holds a single source of truth for all media data. Because it aggregates metadata that is also sourced from OEM systems such as production asset management (PAM) or graphics systems, the station has to define a master metadata schema that all data consumers can use.
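To make the idea of a master metadata schema concrete, here is a minimal sketch in which records from two source systems are normalized into one shared shape. The `MasterMetadata` fields and the input record layouts for the PAM and graphics systems are assumptions made for illustration; a real station would define these from its own systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MasterMetadata:
    """Hypothetical station-wide master record (illustrative fields only)."""
    asset_id: str
    title: str
    duration_seconds: float
    source_system: str
    tags: tuple = ()


def from_pam(record: dict) -> MasterMetadata:
    # Assumed PAM export shape: clipId, clipName, durationMs, keywords.
    return MasterMetadata(
        asset_id=record["clipId"],
        title=record["clipName"].strip(),
        duration_seconds=record["durationMs"] / 1000.0,
        source_system="pam",
        tags=tuple(sorted(set(record.get("keywords", [])))),
    )


def from_graphics(record: dict) -> MasterMetadata:
    # Assumed graphics-system shape: id, label, length_frames, fps.
    return MasterMetadata(
        asset_id=record["id"],
        title=record["label"].strip(),
        duration_seconds=record["length_frames"] / record["fps"],
        source_system="graphics",
    )


pam_item = from_pam(
    {"clipId": "A1", "clipName": " Evening News ", "durationMs": 90500,
     "keywords": ["news", "evening", "news"]}
)
gfx_item = from_graphics(
    {"id": "G1", "label": "Lower third", "length_frames": 250, "fps": 25}
)
```

Each adapter hides the quirks of its source system, so downstream consumers only ever see the master shape.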

Station Workflow Management: All business logic for the station resides in this block, from content planning and acquisition to rights management and the scheduling of playout and distribution. Numerous integrations with third-party systems also take place here, including invoicing, subscription management, audience measurement systems, EPG systems, ad servers, MAM systems, and playout. The content acquisition block allows personnel to request content from third-party producers or internal stakeholders. The acquisition is then orchestrated via the media I/O block so that the content can be ingested.

The workflow engine provides a set of tasks available to be executed, API access to other layers, and auditing and reporting on all activity within the station.
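A minimal sketch of such an engine, assuming an in-process task registry and an in-memory audit log, could look like the following. The `WorkflowEngine` class, its method names, and the `transcode` task are hypothetical and only illustrate the three responsibilities named above.

```python
from datetime import datetime, timezone
from typing import Any, Callable


class WorkflowEngine:
    """Illustrative engine: task registry, execution entry point, audit trail."""

    def __init__(self) -> None:
        self._tasks: dict[str, Callable[..., Any]] = {}
        self.audit_log: list[dict] = []

    def register(self, name: str):
        """Decorator that adds a callable to the set of available tasks."""
        def decorator(func):
            self._tasks[name] = func
            return func
        return decorator

    def available_tasks(self) -> list[str]:
        return sorted(self._tasks)

    def run(self, name: str, user: str, **params):
        """Execute a task and record the attempt, whether it succeeds or fails."""
        entry = {
            "task": name,
            "user": user,
            "params": params,
            "started_at": datetime.now(timezone.utc).isoformat(),
        }
        try:
            result = self._tasks[name](**params)
            entry["status"] = "ok"
            return result
        except Exception as exc:
            entry["status"] = "failed"
            entry["error"] = repr(exc)
            raise
        finally:
            self.audit_log.append(entry)


engine = WorkflowEngine()


@engine.register("transcode")
def transcode(asset_id: str, profile: str) -> str:
    # Stand-in for a call to a real transcoding service.
    return f"{asset_id}.{profile}.mp4"


output = engine.run("transcode", user="ops", asset_id="A1", profile="hd")
```

Other layers would reach `run` through an API, and the audit log would live in durable storage so that reporting can be built on top of it.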

Shared Services: To drive maximum efficiency in workflows, a common set of services such as GenAI-led text-to-image generation, auto-summarization, translation, transcoding, and even metadata enrichment can be invoked through API calls made by the workflow engine. These calls are triggered by events: for example, a video file arriving via the media I/O block causes the QA, transcode, and metadata enrichment services to be called.
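The file-arrival example can be sketched as a small publish/subscribe loop. This is a simplified, synchronous, in-process illustration; a production system would more likely use a message broker, and the event name `media.file_arrived`, the `EventBus` class, and the three handler functions are assumptions made for this example.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict], Any]


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> list:
        # Call every subscribed handler in registration order.
        return [h(payload) for h in self._handlers.get(event_type, [])]


# Stand-ins for the shared services; real ones would be remote API calls.
def run_qa(event: dict) -> str:
    return f"qa:{event['asset_id']}"


def transcode(event: dict) -> str:
    return f"transcode:{event['asset_id']}"


def enrich_metadata(event: dict) -> str:
    return f"enrich:{event['asset_id']}"


bus = EventBus()
for service in (run_qa, transcode, enrich_metadata):
    bus.subscribe("media.file_arrived", service)

# The media I/O block would publish this when a file lands.
results = bus.publish("media.file_arrived", {"asset_id": "A1", "path": "ingest/a1.mxf"})
```

Because the media I/O block only publishes an event, new services can be added by subscribing them, without changing the ingest code.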

Service Operations: All monitoring and control, incident management, KPI reporting, and knowledge management take place in this capability block.

With an event-driven architecture in place, all media supply chain processes can now be measured, benchmarked, optimized, and even costed. This way a CFO knows exactly how much she is spending each month on internal services like metadata extraction, QA, or language translation. Turnaround times for ad campaign reports delivered to advertisers are dramatically shortened, if not eliminated altogether, and subscribers and audiences receive highly personalized content because CRM systems, metadata stores, and CDPs are now interlinked.
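As an illustration of how per-service costing could fall out of the event stream, the sketch below totals cost by month and service from completed-job events. The event fields (`service`, `completed_at`, `units`, `unit_cost`) and all figures are invented for the example.

```python
from collections import defaultdict


def monthly_cost_by_service(events):
    """Sum units * unit_cost per (YYYY-MM, service) pair."""
    totals = defaultdict(float)
    for event in events:
        month = event["completed_at"][:7]  # ISO 8601 timestamp -> "YYYY-MM"
        totals[(month, event["service"])] += event["units"] * event["unit_cost"]
    return dict(totals)


# Hypothetical completed-job events emitted by the shared services.
events = [
    {"service": "translation", "completed_at": "2025-01-14T09:30:00Z",
     "units": 10, "unit_cost": 0.5},
    {"service": "translation", "completed_at": "2025-01-20T16:05:00Z",
     "units": 4, "unit_cost": 0.25},
    {"service": "qa", "completed_at": "2025-02-02T11:00:00Z",
     "units": 2, "unit_cost": 1.5},
]

costs = monthly_cost_by_service(events)
```

The same grouping could equally be run as a query against the metadata store, provided every service call emits an event carrying its usage.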

When designs like the above are coupled with agentic workflows deploying GenAI, a legacy linear broadcast station has the potential to transform into a cloud-first, lean, highly resilient operation with a minimal real estate footprint. The silver lining is that even in such a highly automated scenario, roles for humans in the loop will still exist. These are likely to be highly creative roles such as content strategists, investigative editorial teams, craft editors, and brand strategists, as well as gatekeepers of the final content being published.

Jay is an award-winning media executive. He heads the global M&E practice at TechM. Previously, Jay was the COO of a leading digital news business and the founder of a digital healthcare startup and a music festival. Jay has twice received the Commonwealth Broadcasters award for innovative engineering. When not otherwise occupied, he can be found playing the guitar or exploring quantum computing.