The Era of Big Algorithms and Bigger Claims

An era where every board meeting starts and ends with a discussion on LLMs and gen AI is upon us. It has moved beyond a buzzword into a frenzy of one-upmanship. This frenzy started with GPT-based models in 2023 when OpenAI made its announcement, and now we are staring at another one, DeepSeek. Each new model claims to be better than the last, to have solved human-like reasoning in machines, and to be closer to Artificial General Intelligence (AGI). The truth, however, may be much farther away.

This blog highlights some fundamental changes that DeepSeek has made within its architecture, which are partly responsible for the frenzy, and offers an objective view of where we stand today. It also highlights the importance of a research-driven, services-based organization that we at Tech Mahindra strive to represent and how IT services stand to gain at each juncture of this change.

The Era of LLMs

Large Language Models (LLMs) or Small Language Models (SLMs) have become the cornerstone of artificial intelligence growth in the last two years, primarily because of the way they approach human language understanding. The holy grail of AI has been the ability to enable machines to understand and contextualize human language like the human brain does. LLMs, to an extent, do that by processing massive amounts of data, enabling the machine to communicate naturally with the person posing the query.

Why was this difficult earlier?

- First and foremost, language is natural and has no special form or rule. I can convey my message in one or more languages. It is the listener's relative intelligence that grasps its meaning.

- Human language is fluid and verbose.

- Words are not bound by rules. I can concoct words that do not exist in a given set or dictionary.

There were several attempts in the past to treat language as symbols. (For example, the word “CRAB” evokes a sight, sound, and smell for an English listener, whereas the Greek word “Kavouras,” meaning crab, triggers the same response in a listener who knows Greek.) It was wrongly thought that language could be understood only by treating it as symbolic and substituting each word in a sentence with a symbol that defines its meaning. This symbolic representation gave way to neural networks, where words were converted into vectors, which capture meaning better because vectors can be manipulated mathematically.

The next step was to consider speech and sentences as a sequence of words that a human being thinks of and then expresses either verbally or in writing. This was interesting as it gave rise to algorithms like Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM). However, they also suffered from a major flaw: long sentences lost meaning because the models could retain only limited context.

Let me explain with an example.

Consider this sentence: Last summer, my wife and I decided to take a European holiday. After much deliberation, we decided to rekindle our romantic lives by going to the capital of romance - France. The country offers an eclectic blend of tourist spots and delicious food. We decided to train ourselves in the language of the land and started learning “________.”

For a human, the answer “French” comes naturally; working out which language the author means requires no deliberate reasoning. For a machine, the word “France” appears so far before the blank that a sequential model struggles to retain it as context. Hence, algorithms like RNNs and LSTMs had to be set aside.

This was when the paper on attention came out, and the world completely changed. The paper, aptly titled “Attention Is All You Need,” is the linchpin on which today’s transformer architecture and LLMs sit.
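To make the idea concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The token list, the two-dimensional key and value vectors, and the query are made-up toy values chosen so that the blank "attends" to “France”; they are assumptions for illustration and do not come from any real model.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity of the query with every key, scaled by sqrt(dimension).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy, hand-picked vectors (hypothetical): the query for the blank points
# in the same direction as the key for "France".
tokens = ["France", "food", "learning"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [4.0, 0.0]

weights, output = attention(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.3f}")
```

The point of the sketch is that distance in the sentence plays no role: the weight on “France” depends only on how well its key matches the query, which is why attention does not lose early context the way a sequential model can.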

The era of LLMs was upon us….

Simple Nuances Around LLMs

It is important that I go through some of the ways that LLMs are trained because these training methods define the changes that DeepSeek tries to make. If you find it too technical, here is the simplest explanation: DeepSeek has slightly changed the technique used to train a base LLM, which gives it a compute advantage. So no great shakes there, or is there?

- Data Curation: The first step before training any LLM is to ensure that the data collected for training is of high quality. The data follows the philosophy of “Garbage In, Garbage Out.” When we curate this data, the following principles need to be considered:

- Allow Data Diversity: If training data comes from a single domain, the model becomes biased and gives answers skewed toward that domain. A data engineer’s first task is to create a balanced dataset.

- Quality Filtering: Filtering poor-quality data is important. This may involve removing illegible words, foreign-language (non-native) words, excessive punctuation, signs and symbols, HTTP-based URLs, etc.

- De-Duplication: It is important to remove duplicates; otherwise the model over-weights the repeated text and tends to produce repetitive answers.

- Privacy Redaction: Sensitive Personal Information (SPI) and Personally Identifiable Information (PII) must be removed.
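A few of these principles can be sketched in a handful of lines. This is a toy illustration only, not the pipeline used for any real model: the regular expressions, the `[EMAIL]` placeholder, and the `min_words` threshold are assumptions, and real redaction and de-duplication are far more involved.

```python
import re

# Hypothetical, deliberately simple patterns for illustration.
URL_RE = re.compile(r"https?://\S+")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PUNCT_RUN_RE = re.compile(r"([!?.,;:])\1{2,}")

def clean(text):
    """Quality filtering and a crude form of privacy redaction."""
    text = URL_RE.sub("", text)            # drop HTTP-based URLs
    text = EMAIL_RE.sub("[EMAIL]", text)   # redact e-mail addresses
    text = PUNCT_RUN_RE.sub(r"\1", text)   # collapse runs like "!!!" to "!"
    return " ".join(text.split())          # normalize whitespace

def curate(docs, min_words=3):
    """Clean each document, then drop very short ones and exact duplicates."""
    seen = set()
    kept = []
    for doc in docs:
        cleaned = clean(doc)
        key = cleaned.lower()
        if len(cleaned.split()) < min_words or key in seen:
            continue
        seen.add(key)
        kept.append(cleaned)
    return kept

docs = [
    "Visit https://example.com for great food!!!",
    "visit for great food!",
    "ok",
    "Mail me at a.b@example.com please",
]
print(curate(docs))
```

Data diversity is not covered here because it is a property of the whole corpus (how many documents come from each domain) rather than of a single document.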

- Tokenization: Tokenization is the process of splitting text into tokens (sub-words), which are then mapped to embeddings. Much has been said about this earlier, so I will not go into details. However, a token is not a word. It represents a sub-word, and breaking words into sub-words lets us represent more words with fewer distinct tokens. An example is jump, jumping, and jumped. If we were to convert each word into a token, we would have three. Using tokenization, we can have one token, “jump,” and each verb suffix can be another token. While it appears that we created more tokens than words, these small sub-words, like -ed and -ing, are reused across the whole vocabulary when training LLMs on data that runs into trillions of tokens. We use the Byte Pair Encoding (BPE) technique to do this.

- Deciding the Model Architecture: Deciding the model architecture is important as it represents some nuanced changes in the code and some parameters which may change. Model architecture may mean GPT-based, Llama-based, Qwen-based, or similar architectures.

- Pre-Training the Model: This is the first step in which neural networks, transformers, and attention play a part. Pre-training produces the first model, sometimes called the base LLM. Its purpose is to predict the next word in any given sentence, which makes it, in effect, a very powerful autocomplete. Remember the “French” example I gave earlier. A pre-trained model could resolve that issue as it has an in-built attention mechanism. A pre-trained model is good, but it cannot have a conversation with the user. Given a question like “Is eating garlic with high BP okay,” the model will not be able to answer because it has never been trained to do that. It will simply continue the text with whatever it has learned is likely to follow, such as “BP can be measured after you have eaten garlic.” That does not answer the question. The expectation here was a simple yes or no.

- (*) Supervised Fine-Tuning (SFT): To resolve this limitation, the base model is then tuned using the SFT technique. A human annotator compiles a list of Questions and Answers (Q&As), and the model is trained on them to generate accurate responses. This allows the model to treat the exchange as a conversation.

NOTE: I want you to remember this step as this is where DeepSeek-R1-Zero changes course.

- (*) Aligning the Model: While in the previous step, the model has acquired Q&As and evolved from a simple word completion system, the model can still hallucinate and provide wrong answers. Therefore, the model needs to align itself to provide the right answer to a question. This involves annotation, where a series of prompts are given to the SFT-trained model and the LLM responds with an answer. A human annotator is used to adjudge the best response, which is then used to further train the model.

It's not as simple as it sounds, though. Alignment is where the most innovation is possible. I will describe three ways in which alignment has been done: one by OpenAI and Anthropic, which set the baseline; one by us during Project Indus; and one by DeepSeek. The techniques used by us and DeepSeek save on the cost of computing, which does not mean that OpenAI and Anthropic did not do this right. These are algorithmic variations that produce results.

NOTE: I want you to remember this step as this is where DeepSeek-R1-Zero, R1, and Project Indus change course.
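Going back to the tokenization step in the list above, the jump, jumping, jumped example can be reproduced with a toy version of the Byte Pair Encoding merge loop. This is a minimal sketch of the textbook algorithm; the word frequencies are invented, and production tokenizers add byte-level handling, special tokens, and much larger corpora.

```python
from collections import Counter

def pair_counts(vocab):
    """Count how often each adjacent symbol pair occurs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(vocab, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Start from characters and repeatedly merge the most frequent pair."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(vocab)
        if not counts:
            break
        best = max(counts, key=counts.get)
        vocab = merge_pair(vocab, best)
        merges.append(best)
    return merges, vocab

# Invented frequencies for the three words in the example.
word_freqs = {"jump": 5, "jumping": 3, "jumped": 2}
merges, vocab = learn_bpe(word_freqs, 3)
print(merges)
print(vocab)
```

After three merges the shared stem has become one token, while the suffixes are still split into characters; with more merges and a larger corpus, frequent suffixes such as -ing and -ed would become tokens of their own.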

Alignment: The Big Tamale

Alignment has been the holy grail as it requires a lot of computing power on top of SFT. Alignment means ensuring the model is good enough to answer questions posed through prompts. These prompts may be general questions, or the model may be aligned to read from a document, to answer questions about mathematical functions, and so on.

Alignment has traditionally been done with a technique called Reinforcement Learning from Human Feedback (RLHF), made popular by OpenAI and Anthropic. I’ll try and explain all the techniques in layman’s terms, but I’ll also add some formulae for the same. Please don’t get perturbed by them. They are there to illustrate the point and not to dissuade the reader.

Reinforcement Learning from Human Feedback (RLHF)

This is a technique made popular by OpenAI and Anthropic but not a very simple one to hone. So, we now have a base model that has been through supervised fine-tuning (SFT-ed) with some Q&A data. When you ask this model a question, it responds, but you feel the answers are not as expected. Therefore, you have to align. This is where human annotations and associated costs come in. A set of prompts is given to the SFT-ed LLM, and the LLM responds with answers. Humans annotate the results to decide if the answers are good or bad. These judgments are then used to train a reward model. Simply put, a reward model is a model that gives more reward to the right answer and less to the wrong answer, and by subsequent training, the LLM learns to answer more accurately.
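One common way to train such a reward model is a pairwise loss that is small when the good answer scores higher than the bad one. The sketch below assumes that formulation (often called a Bradley-Terry style loss); the text above does not say which loss was used, and the scores are made-up numbers standing in for a real model's outputs.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def pairwise_reward_loss(score_good, score_bad):
    """-log(sigmoid(score_good - score_bad)): low when the good answer wins clearly."""
    return softplus(-(score_good - score_bad))

# Hypothetical reward scores for one annotated pair of answers.
print(pairwise_reward_loss(2.0, 0.0))  # reward model agrees with the annotator
print(pairwise_reward_loss(0.0, 0.0))  # reward model cannot tell them apart
print(pairwise_reward_loss(0.0, 2.0))  # reward model disagrees with the annotator
```

Minimizing this loss over many annotated pairs pushes the reward model to score the answers humans preferred above the ones they rejected.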

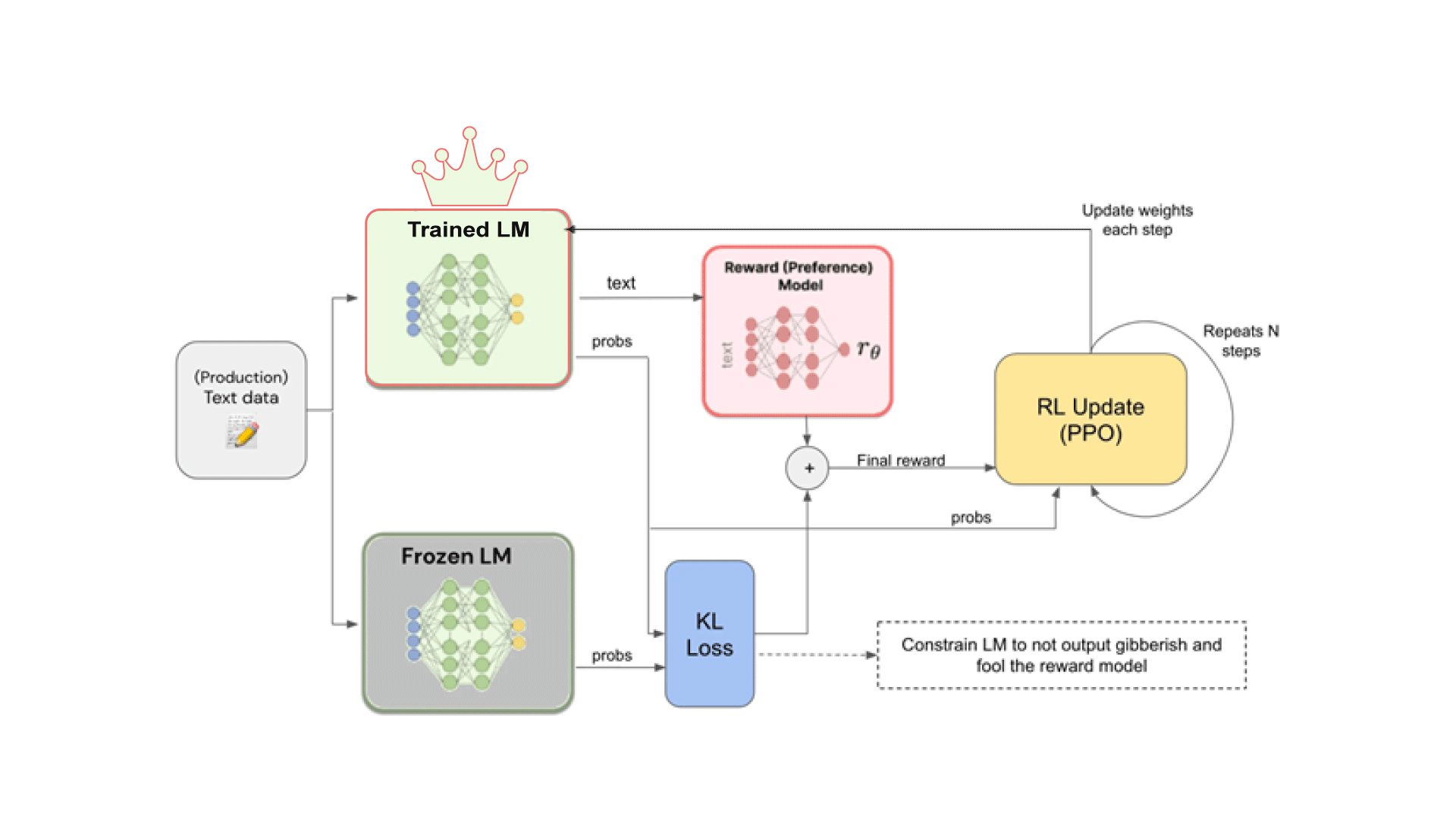

If I were to explain this with a simple illustration, it would appear like this:

Fig 1: A reward model illustration

Please don’t get perturbed here. What happens is simply this:

- The base model, which was SFT-ed, is frozen, and a clone of it is taken as the model to be trained.

- Prompts are fed to both the frozen LLM and the trained LLM, and the results are produced.

- The answers from the trained LLM are passed to the reward model, which assigns each answer a reward (Remember: the reward model was trained earlier to score right answers higher).

- Sometimes models get greedy and insert words that push the reward higher without improving the answer. This phenomenon is called “reward hacking.” To avoid this, the frozen model is used as a reference, and the answers provided by the trained and frozen LLMs are compared using a Kullback-Leibler (KL) divergence term to see how much the trained model deviates from the frozen model.

- A loop of reinforcement learning runs continuously to ensure weights are updated, and the trained model eventually learns to answer right.
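The interplay between the reward and the KL check in the steps above can be sketched as a single number per answer. This is a simplified illustration, assuming the common setup where the KL term is estimated from the per-token log-probabilities of the sampled answer and subtracted from the reward; the log-probabilities, the reward value, and `beta` are hypothetical.

```python
def kl_penalized_reward(reward, trained_logprobs, frozen_logprobs, beta=0.1):
    """Subtract a KL-style penalty so the trained model cannot drift too far.

    The sum of per-token log-probability differences on a sampled answer is a
    simple estimate of how far the trained model has moved from the frozen one.
    """
    kl_estimate = sum(t - f for t, f in zip(trained_logprobs, frozen_logprobs))
    return reward - beta * kl_estimate, kl_estimate

# Hypothetical values: the trained model is much more confident in this answer
# than the frozen model, which is a sign that it may be gaming the reward.
shaped, kl = kl_penalized_reward(
    reward=1.0,
    trained_logprobs=[-0.1, -0.2],
    frozen_logprobs=[-1.0, -1.2],
    beta=0.1,
)
print(f"KL estimate: {kl:.2f}, reward after penalty: {shaped:.2f}")
```

A larger `beta` keeps the trained model closer to the frozen one at the cost of slower improvement; a smaller one allows more change but makes reward hacking more likely.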

While RLHF performs brilliantly well and has done this for, let’s say, OpenAI, there are some challenges:

- Costs are high because three models are involved at the same time: the trained LLM, the frozen LLM, and the reward model.

- Reinforcement learning sometimes doesn’t converge and goes on in an endless loop, which can be cost-prohibitive.

- The number of parameters may lead to a lot of computation.

Direct Preference Optimization (DPO): Technique used by Project Indus

DPO is an advanced technique developed by researchers at Stanford University. Unlike RLHF, which requires three models to be run together, DPO streamlines the process by performing a direct preference check to improve model responses. It addresses RLHF’s key disadvantages by simplifying alignment. While the process of providing prompts to the base model and receiving responses remains unchanged, and human annotation is still involved, DPO introduces a more efficient method for distinguishing between good and bad answers.

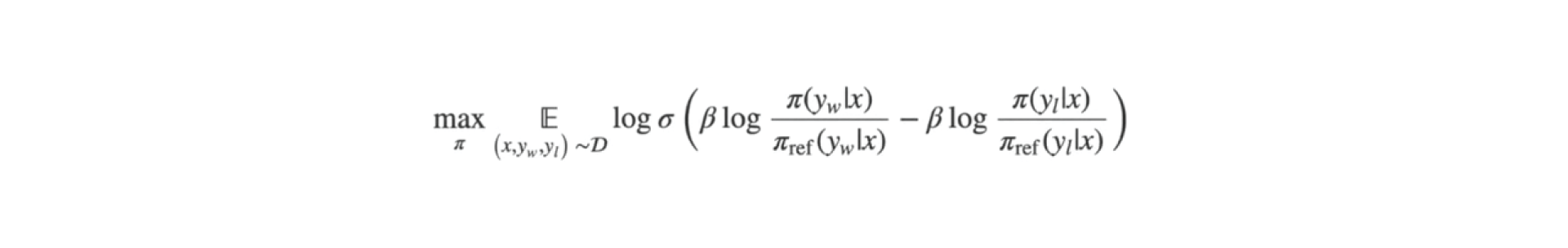

DPO uses the following formula:

Again, I don’t want the reader to be perturbed here. In essence, it compares the good and bad answers directly and optimizes the gap between them in one go, rather than first training a separate reward model and then running reinforcement learning against it.
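For readers who prefer code to formulae, here is a minimal sketch of the DPO loss for a single preference pair, following the form in the published DPO paper: the loss is -log σ(β · [(policy − reference) on the good answer − (policy − reference) on the bad answer]), where each term is the log-probability of the whole answer. The log-probabilities and `beta` below are hypothetical, and this is not a description of the Project Indus implementation.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def dpo_loss(policy_good, policy_bad, ref_good, ref_bad, beta=0.1):
    """DPO loss for one (good answer, bad answer) pair.

    All four arguments are log-probabilities of a full answer: two from the
    model being trained (policy) and two from the frozen reference model.
    """
    margin = beta * ((policy_good - ref_good) - (policy_bad - ref_bad))
    return softplus(-margin)  # equals -log(sigmoid(margin))

# Before training, policy and reference agree, so the loss is log(2).
print(dpo_loss(-5.0, -5.0, -5.0, -5.0))
# After the policy shifts probability toward the good answer, the loss drops.
print(dpo_loss(-4.0, -6.0, -5.0, -5.0))
```

Only two models appear here, the policy and the frozen reference, and there is no reward model or reinforcement learning loop, which is where the compute saving comes from.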

Group Relative Policy Optimization (GRPO): Technique used by DeepSeek

Probably the biggest innovation from DeepSeek is using a different technique for alignment, and this is where GRPO comes in. All my statements below are from the DeepSeek paper, so the readers are free to go through it.

- DeepSeek interestingly calls its models “reasoning models," and they appear to reflect that. They have two models – DeepSeek-R1-Zero and DeepSeek-R1.

- DeepSeek-R1-Zero skips the SFT step and jumps directly into large-scale reinforcement learning. This showed remarkable reasoning capabilities, but it comes with poor readability and language mixing (it is for this reason that Project Indus started with one language).

- DeepSeek-R1 was thus introduced with multi-stage training and cold-start data.

- DeepSeek has released six dense models (1.5B, 7B, 8B, 14B, 32B, 70B) distilled from DeepSeek-R1 and based on the Qwen and Llama architectures. Distillation is what makes them cheaper to run. The goal was to establish reasoning capabilities without supervised data, focusing on self-evolution through the RL process.

- The technique applied is GRPO, a variant of Proximal Policy Optimization (PPO). It appears to work better than the OpenAI approach of going through SFT and then applying RLHF. GRPO enhances mathematical reasoning abilities while concurrently reducing the memory usage of PPO.

- Simplified Training: Unlike PPO, GRPO eliminates the need for a separate value function model. This simplifies training and reduces memory usage, making it more efficient.

- Group-Based Advantage Calculation: GRPO leverages a group of outputs for each input, calculating the baseline reward as the average score of the group. This group-based approach aligns better with reward model training, especially for reasoning tasks.

- Direct KL Divergence Optimization: Instead of incorporating KL divergence into the reward signal (as in PPO), GRPO integrates it directly into the loss function, providing finer control during optimization.
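The group-based advantage calculation is simple enough to sketch directly. For each prompt, several answers are sampled and scored, and each answer's advantage is its reward relative to the group average. Dividing by the group's standard deviation follows the GRPO formulation as I understand it from the DeepSeek papers; the use of the population standard deviation, the `eps` term, and the example rewards are assumptions for illustration.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to the other answers for the same prompt.

    The group mean acts as the baseline, so no separate value model is needed.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four answers sampled for one math prompt:
# 1.0 if the final answer was correct, 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
```

Answers that beat the group average get a positive advantage and are reinforced, while below-average answers are discouraged. If every answer in the group gets the same reward, all advantages are zero and that prompt contributes no learning signal.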

Conclusion

DeepSeek follows a long line of models that leverage innovative alignment and model-building techniques to reduce costs. While the core algorithms remain largely unchanged, and most techniques outside of alignment stay the same, the approach to alignment introduces efficiency. Similar innovations have been seen in the past, where model creators have explored alternatives to RLHF to achieve lower costs.

Where Do Indian IT Services and Research Stand

As the Chief Innovation Officer (CIO) of a large IT services company, I never find this question easy to answer. There are strengths and areas of improvement.

The strengths that we showcased:

- I am personally proud that we have managed to build an LLM from the ground up, through extensive fundamental research and by ensuring the data was done right.

- Only research made this possible; it was carried out for three months before any modeling effort began.

- We then placed this model on CPUs for inferencing, and we used Intel's Xeon 6 processors to demonstrate to the world that it can be done at a fraction of the cost.

The areas of improvement or things to do:

- I think we haven’t marketed our craft well, and this needs to change.

- IT services organizations need to undertake more research.

- We should focus on foundational LLMs, ensuring dialects are embedded while also exploring alternative architectures for cost efficiency. India is often seen as divided into India and Bharat. For some Bharat is rural and India is urban, but I see “Bharat” as anyone whose first language is their native tongue. To capture the true emotion and cognition of these individuals, a dialect-based foundational model is crucial.

- There should also be a push for SLMs to run on Edge devices like chips and PCs. This will lay the foundation for advancements in education, infotainment, and physical systems we interact with daily.

- Current models still do not reason like humans. To move toward Artificial General Intelligence (AGI), models need to reason more like us. Hence, the push towards neuro-symbolic AI is important. It combines neural network architectures with symbolic reasoning, which some people refer to as “Good Old-Fashioned AI (GOFAI),” with the aim of making models more efficient and cost-effective. A great example of the symbolic side is Siri, which is based on symbolic reasoning.

- Comprehensive research into neuro-symbolic architectures is essential to advance further.

The changes that DeepSeek has brought validate the essential nature of IT services and how they can serve as a glue between the infrastructure and the cloud. The role of IT services has suddenly changed from being a consumer to now a tech creator with partners in the ecosystem.

Our Test on DeepSeek

Like any inquisitive team on the edge of research, we tested the DeepSeek model and made the following observations.

- The website was under heavy load and was rarely answering questions. Out of the 10 prompts we gave, we only got an answer 2 times. This was after waiting for 30-60 seconds.

- The web variant seems to be on par with ChatGPT in response quality, setting aside the response times, which were affected by the server load.

- The variants available for local use (1.5B, 7B, 8B, 14B, 32B, and 70B) are Qwen and Llama models distilled from DeepSeek-R1. The original DeepSeek model has 671B parameters and is too large to run locally on typical hardware.

- On LM Studio, none of the original DeepSeek models are supported. However, there are models that the LM Studio community has converted to GGUF format so that they can be run.

- The model seems to have a thought component and then uses that to refine the result printed to the user. This is a slight push towards agentic workflows.
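When running the distilled variants locally, the thought component can be separated from the final answer with a few lines of code. The sketch below assumes the reasoning is wrapped in `<think>` and `</think>` tags, which is how the R1-style models we are aware of emit it; if a given build uses a different delimiter, the pattern would need to change. The sample output string is invented.

```python
import re

# Assumed delimiter for the reasoning section of the raw model output.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(raw):
    """Return (thought, answer) from a raw model response."""
    match = THINK_RE.search(raw)
    if not match:
        return "", raw.strip()
    thought = match.group(1).strip()
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return thought, answer

# Invented example of a raw response.
raw = "<think>2 + 2 is 4, so the user wants 4.</think>\nThe answer is 4."
thought, answer = split_reasoning(raw)
print("Thought:", thought)
print("Answer:", answer)
```

Keeping the two parts separate makes it easy to log the reasoning for inspection while showing only the final answer to the user.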

Nikhil has been a researcher all his life and is now leading the growth of AI and quantum computing research within Tech Mahindra. His area of business research is how quantum computing, AI, and neuroscience would inspire the growth of AI and the next change in society, business, and humanity. He has won numerous awards, including the 2020, 2021, and 2023 Innovation Congress awards, for being the most innovative leader in India.

Nikhil is also a TEDx speaker and the author of a best-selling book, Courage, the Journey of an Innovator. One of his long-standing visions has been to enable machines to talk in the local Indian dialects. Most notably, he has spearheaded Project Indus, Tech Mahindra's seminal effort to build an Indic LLM (a homegrown large language model), which was successfully launched globally in June 2024.

Nikhil holds a master's degree in computing with a specialization in distributed computing from the Royal Melbourne Institute of Technology, Melbourne, and is an avid physicist.
